This is the first of a series of blog posts on the most common failures we’ve encountered with Kubernetes across a variety of deployments.

In this first part of the series, we focus on networking. We list the issues we have encountered, include easy ways to troubleshoot and discover them, and offer some advice on how to avoid failures and achieve more robust deployments. Finally, we list some of the tools that we have found helpful when troubleshooting Kubernetes clusters.

Kubernetes supports a variety of networking plugins and each one can fail in its own way.

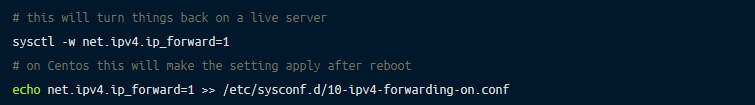

At its core, Kubernetes relies on the Netfilter kernel module to set up low-level cluster IP load balancing. This requires two critical kernel settings, IP forwarding and bridge netfilter, to be turned on.

IP forwarding is a kernel setting that allows traffic arriving on one interface to be routed out through another interface.

This setting is necessary for the Linux kernel to route traffic from containers to the outside world.

Sometimes this setting is reset by a security team running periodic security scans or enforcement on the fleet, or it was never configured to survive a reboot. When this happens, networking starts failing.
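As a rough illustration of checking this setting programmatically, the sketch below reads the standard procfs entry for IPv4 forwarding. The function names are ours and purely illustrative, and treating an unreadable file as "disabled" is an assumption made for simplicity.

```python
from pathlib import Path

# Standard procfs location of the IPv4 forwarding switch on Linux.
IP_FORWARD_PATH = Path("/proc/sys/net/ipv4/ip_forward")


def parse_sysctl_flag(raw: str) -> bool:
    """Interpret the contents of a boolean sysctl file ("0" or "1")."""
    return raw.strip() == "1"


def ip_forwarding_enabled(path: Path = IP_FORWARD_PATH) -> bool:
    """Return True if IPv4 forwarding is on.

    Assumption: a missing or unreadable file is reported as False.
    """
    try:
        return parse_sysctl_flag(path.read_text())
    except OSError:
        return False
```

Running `ip_forwarding_enabled()` periodically on each node is one way to notice that the setting has been reset before pods start timing out.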

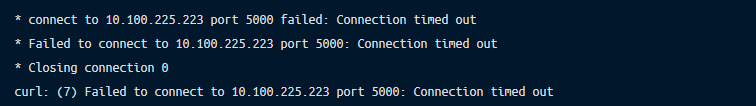

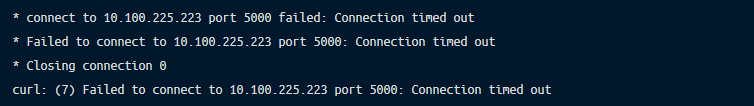

Pod to service connection times out:

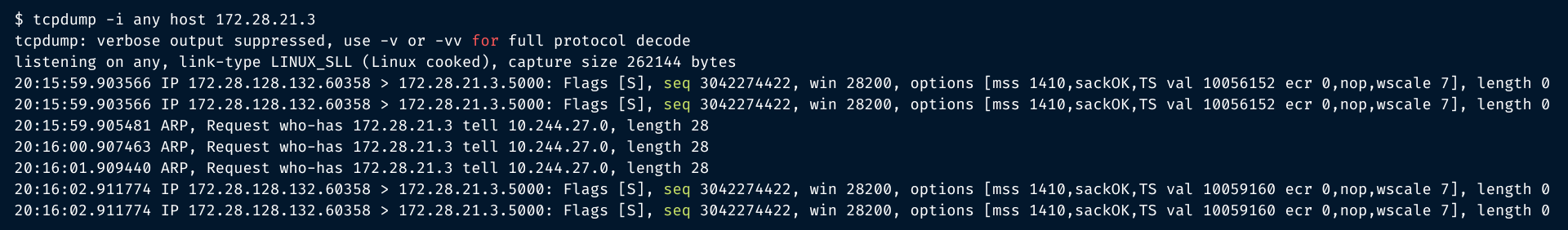

Tcpdump may show many repeated SYN packets being sent, with no ACK received in response.
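To make the "SYN without ACK" pattern easier to spot in a long capture, here is a minimal sketch that scans tcpdump's default text output and reports flows with SYNs that never received a SYN-ACK. The function name and regular expression are our own, and the sketch assumes IPv4 lines in tcpdump's usual `IP src > dst: Flags [...]` form.

```python
import re
from collections import Counter

# Matches the "IP src > dst: Flags [..]" part of tcpdump's default TCP output.
LINE_RE = re.compile(r"IP (\S+) > (\S+): Flags \[([^\]]+)\]")


def unanswered_syns(lines):
    """Return {(src, dst): syn_count} for flows with SYNs but no SYN-ACK reply."""
    syns = Counter()
    answered = set()
    for line in lines:
        match = LINE_RE.search(line)
        if not match:
            continue
        src, dst, flags = match.groups()
        if flags == "S":
            syns[(src, dst)] += 1
        elif flags == "S.":
            # A SYN-ACK travels in the reverse direction of the original SYN.
            answered.add((dst, src))
    return {flow: count for flow, count in syns.items() if flow not in answered}
```

Feeding it the lines of a saved capture, for example `unanswered_syns(open("capture.txt"))` with a hypothetical file name, returns the flows worth investigating.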

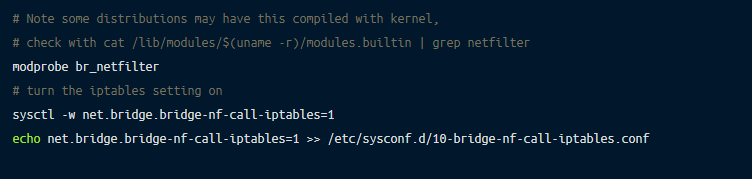

The bridge-netfilter setting enables iptables rules to work on Linux bridges such as the ones set up by Docker and Kubernetes.

This setting is necessary for the Linux kernel to be able to perform address translation in packets going to and from hosted containers.

When this setting is off, network requests to services outside the Pod network start timing out with destination host unreachable or connection refused errors.
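A quick way to tell these cases apart is to look at the procfs entry for the setting. The sketch below is illustrative only: the function name is ours, and it relies on the fact that the `bridge-nf-call-iptables` file appears only once the `br_netfilter` kernel module is loaded.

```python
from pathlib import Path

# procfs entry for the bridge-netfilter iptables hook.
BRIDGE_NF_PATH = Path("/proc/sys/net/bridge/bridge-nf-call-iptables")


def bridge_netfilter_status(path: Path = BRIDGE_NF_PATH) -> str:
    """Classify the setting as 'enabled', 'disabled', or 'missing'."""
    try:
        value = path.read_text().strip()
    except FileNotFoundError:
        # The file only exists once the br_netfilter module is loaded.
        return "missing"
    return "enabled" if value == "1" else "disabled"
```

A result of `"missing"` suggests loading the module first, while `"disabled"` points at the sysctl value itself.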

Kubernetes provides a variety of networking plugins that enable its clustering features while providing backwards compatible support for traditional IP and port based applications.

One of the most common on-premises Kubernetes networking setups leverages a VXLAN overlay network, where IP packets are encapsulated in UDP and sent over port 8472.

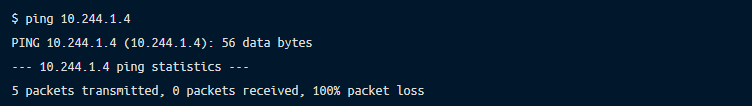

There is 100% packet loss between pod IPs, seen either as lost packets or as destination host unreachable errors.

When testing connectivity, it is better to use the same protocol that carries the overlay data, because firewall rules can be protocol-specific and may, for example, block only UDP traffic.
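As a minimal sketch of a same-protocol test, the helpers below send and receive a single UDP datagram between two hosts. The function names are hypothetical, and the port constant simply mirrors the 8472 mentioned above; if the overlay is already running on a node, that port may be taken and a neighboring test port would be needed.

```python
import socket
from typing import Optional

VXLAN_PORT = 8472  # UDP port mentioned in the text; adjust for your overlay


def open_udp_listener(bind_addr: str, port: int, timeout: float = 5.0) -> socket.socket:
    """Bind a UDP socket that waits at most `timeout` seconds per receive."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.settimeout(timeout)
    try:
        sock.bind((bind_addr, port))
    except OSError:
        sock.close()
        raise
    return sock


def wait_for_probe(sock: socket.socket) -> Optional[bytes]:
    """Return the payload of one datagram, or None if nothing arrives in time."""
    try:
        payload, _sender = sock.recvfrom(2048)
        return payload
    except socket.timeout:
        return None


def send_probe(target: str, port: int, payload: bytes = b"probe") -> int:
    """Send one UDP datagram and return the number of bytes handed to the kernel."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        return sock.sendto(payload, (target, port))
```

On one node, call `wait_for_probe(open_udp_listener("0.0.0.0", VXLAN_PORT))`; on another, call `send_probe` with the first node's IP. A `None` result on the listener side hints that UDP traffic on that port is being dropped somewhere in between.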

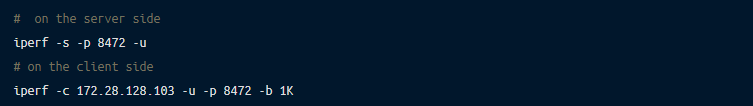

iperf could be a good tool for that:

Update the firewall rule to stop blocking the traffic. Here is some common iptables advice.

AWS performs a source/destination check by default. This means that AWS checks whether packets going to the instance have one of the instance IPs as the target address.

Many Kubernetes networking backends use target and source IP addresses that are different from the instance IP addresses to create Pod overlay networks.

Sometimes this setting is changed by Infosec applying account-wide policy enforcement to the entire AWS fleet, and networking starts failing:

Pod to service connection times out:

Tcpdump could show that lots of repeated SYN packets are sent, without a corresponding ACK anywhere in sight.

Turn off source destination check on cluster instances following this guide.
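To see why the check interferes with overlay traffic, here is a deliberately simplified model of it, not AWS code: a packet passes only if the instance itself is the source or the destination. The function name and all addresses are made up for illustration.

```python
import ipaddress
from typing import Iterable


def passes_source_dest_check(src: str, dst: str, instance_ips: Iterable[str]) -> bool:
    """Simplified model: allow the packet only if the instance owns src or dst."""
    owned = {ipaddress.ip_address(ip) for ip in instance_ips}
    return ipaddress.ip_address(src) in owned or ipaddress.ip_address(dst) in owned


# Hypothetical addresses: the instance owns 10.0.1.10, pods live in 10.244.0.0/16.
print(passes_source_dest_check("10.0.1.20", "10.0.1.10", ["10.0.1.10"]))    # True
print(passes_source_dest_check("10.244.2.7", "10.244.1.5", ["10.0.1.10"]))  # False
```

The second call stands for a pod-to-pod packet whose addresses belong to the Pod network rather than to the instance, which is the kind of traffic the check discards.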

Kubernetes sets up a special overlay network for container-to-container communication.

With an isolated pod network, containers can get unique IPs and avoid port conflicts on a cluster. You can read more about the Kubernetes networking model here.

The problems arise when Pod network subnets start conflicting with host networks.

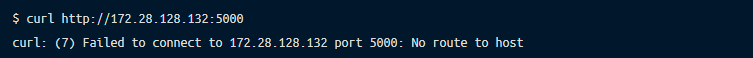

Pod to pod communication is disrupted with routing problems.

Start with a quick look at the allocated pod IP addresses:

Compare the host IP range with the Kubernetes subnets specified in the apiserver:

The IP address range can be specified in your CNI plugin or in the kubenet pod-cidr parameter.

Double-check what RFC1918 private network subnets are in use in your network, VLAN or VPC and make certain that there is no overlap.

Once you detect the overlap, update the Pod CIDR to use a range that avoids the conflict.
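The overlap check itself is easy to automate. The sketch below uses Python's standard `ipaddress` module; the function name and the example ranges are assumptions for illustration, so substitute the subnets actually used in your environment.

```python
import ipaddress
from typing import Iterable, List


def find_overlaps(pod_cidr: str, host_cidrs: Iterable[str]) -> List[str]:
    """Return the host subnets that overlap with the Pod CIDR."""
    pod_net = ipaddress.ip_network(pod_cidr)
    return [
        cidr for cidr in host_cidrs
        if pod_net.overlaps(ipaddress.ip_network(cidr))
    ]


# Hypothetical ranges: a 10.244.0.0/16 Pod CIDR collides with a 10.0.0.0/8 host network.
print(find_overlaps("10.244.0.0/16", ["10.0.0.0/8", "192.168.1.0/24"]))  # ['10.0.0.0/8']
print(find_overlaps("172.28.0.0/16", ["10.0.0.0/8", "192.168.1.0/24"]))  # []
```

An empty list for a candidate Pod CIDR means it does not collide with any of the listed host networks.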

Here is a list of tools that we found helpful while troubleshooting the issues above.

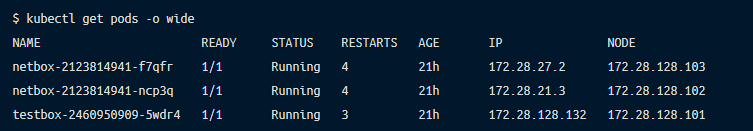

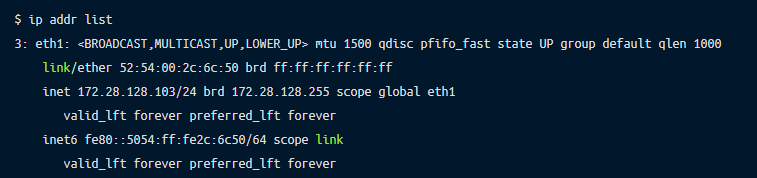

Tcpdump is a tool that captures network traffic and helps you troubleshoot some common networking problems. Here is a quick way to capture traffic on the host to the target container with IP 172.28.21.3.

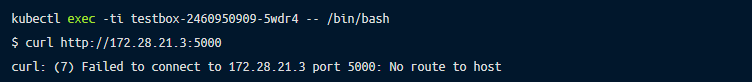

We are going to join one container and try to reach another container from it:

On the host with the container, we capture the traffic related to the target container IP:

As you can see, there is trouble on the wire: the kernel fails to route the packets to the target IP.

Here is a helpful intro on tcpdump.
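When captures like this need to be scripted across many nodes, it can help to build the tcpdump command from a validated IP address. The helper below is a hypothetical sketch; it only assembles the argument list and relies on tcpdump's standard `-n`, `-i`, and `host` options.

```python
import ipaddress
import shlex
from typing import List


def tcpdump_host_command(target_ip: str, interface: str = "any") -> List[str]:
    """Build a tcpdump argv that captures all traffic to or from target_ip."""
    ipaddress.ip_address(target_ip)  # raises ValueError on a malformed address
    # -n disables name resolution so raw IPs appear in the output.
    return ["tcpdump", "-n", "-i", interface, "host", target_ip]


print(shlex.join(tcpdump_host_command("172.28.21.3")))
# prints: tcpdump -n -i any host 172.28.21.3
```

The resulting list can be passed to `subprocess.run` on the host, typically with root privileges.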

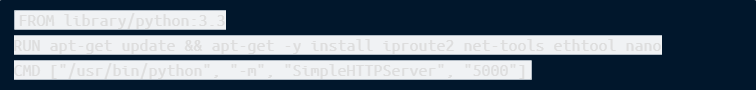

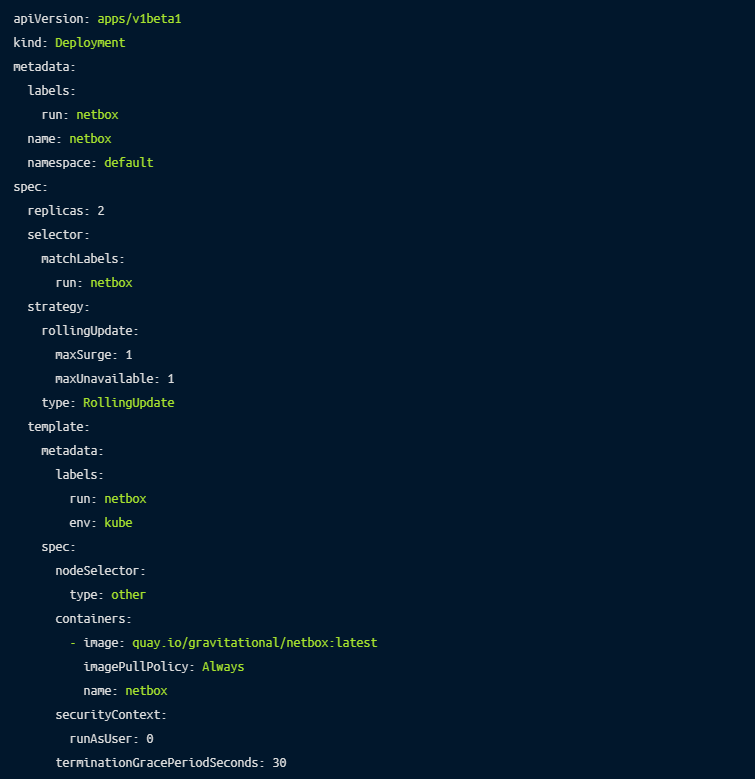

Having a lightweight container with all the tools packaged inside can be helpful.

Here is a sample deployment:

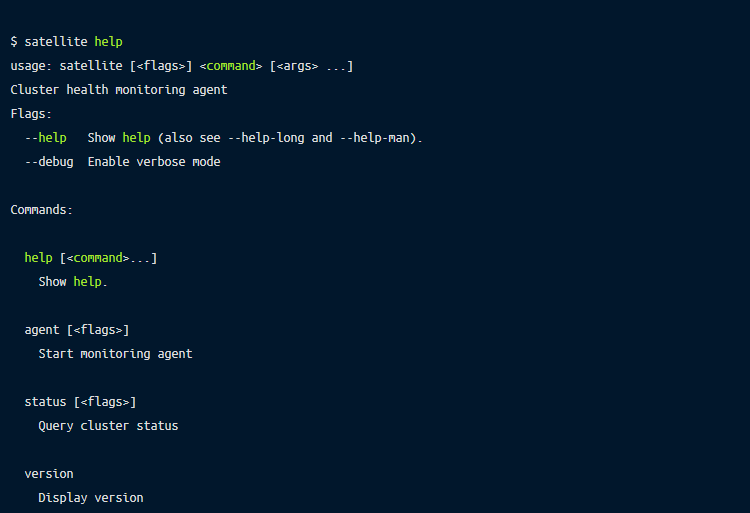

Satellite is an agent that collects health information in a Kubernetes cluster. It is both a library and an application. As a library, Satellite can be used as a basis for a custom monitoring solution. It helps troubleshoot common network connectivity issues, including DNS issues.

Satellite includes basic health checks and more advanced networking and OS checks we have found useful.

We have spent many hours troubleshooting kube endpoints and other issues on enterprise support calls, so hopefully this guide is helpful! While these are some of the more common issues we have come across, it is still far from complete.

You can also check out our Kubernetes production patterns training guide on Github for similar information.

We have productized our experiences managing cloud-native Kubernetes applications with Gravity and Remoteler. Feel free to reach out to schedule a demo.